Cisco APIC 6.0(2h) released

New software features

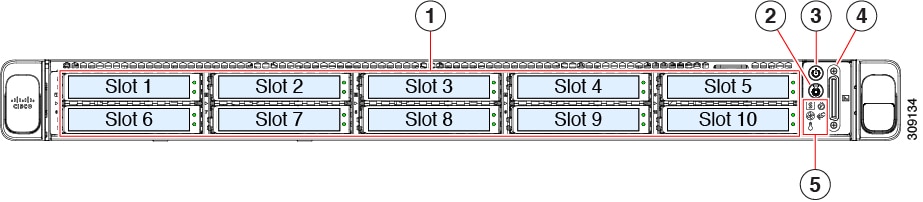

Support for Cisco APIC-M4/L4 servers

This release adds support for the APIC-L4 and APIC-M4 servers.

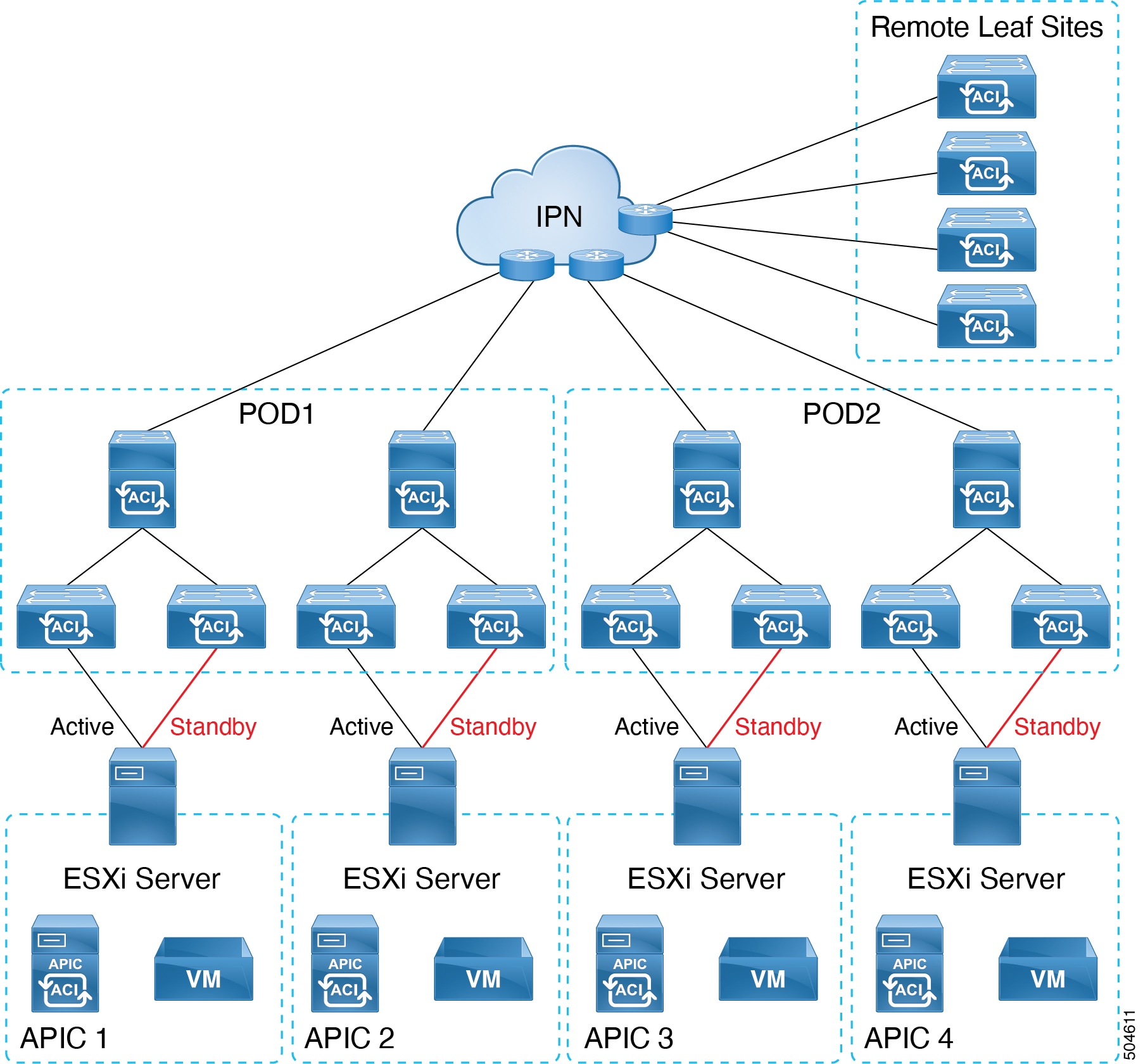

Support for Cisco APIC virtual form factor in ESXi

You can deploy a Cisco APIC cluster wherein all the Cisco APICs in the cluster are virtual APICs. You can deploy a virtual APIC on an ESXi using the OVF template.

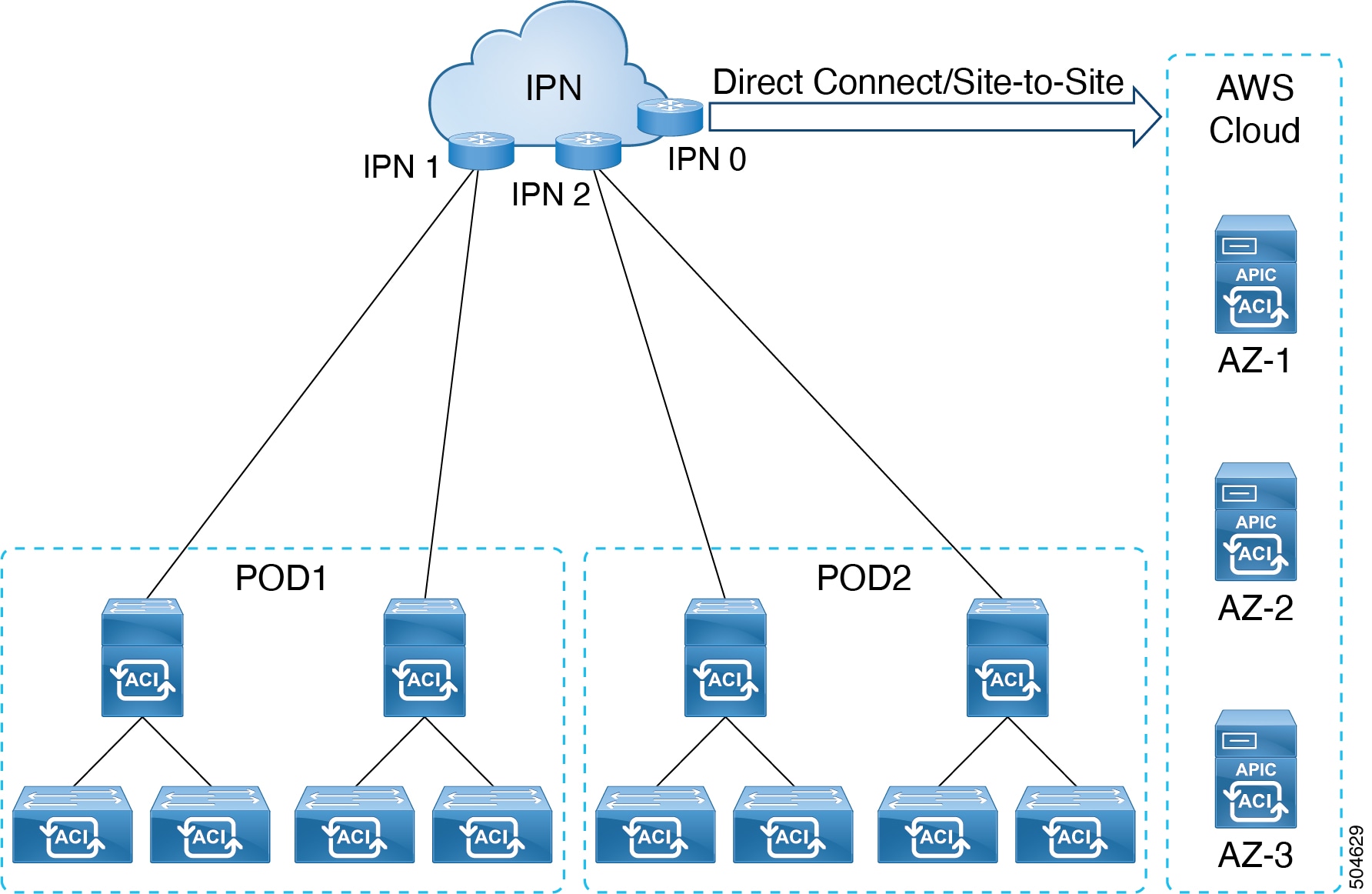

Support for Cisco APIC cloud form factor using AWS

You can deploy a Cisco APIC cluster wherein all the Cisco APICs in the cluster are virtual APICs. You can deploy a virtual APIC on AWS using the CloudFormation template.

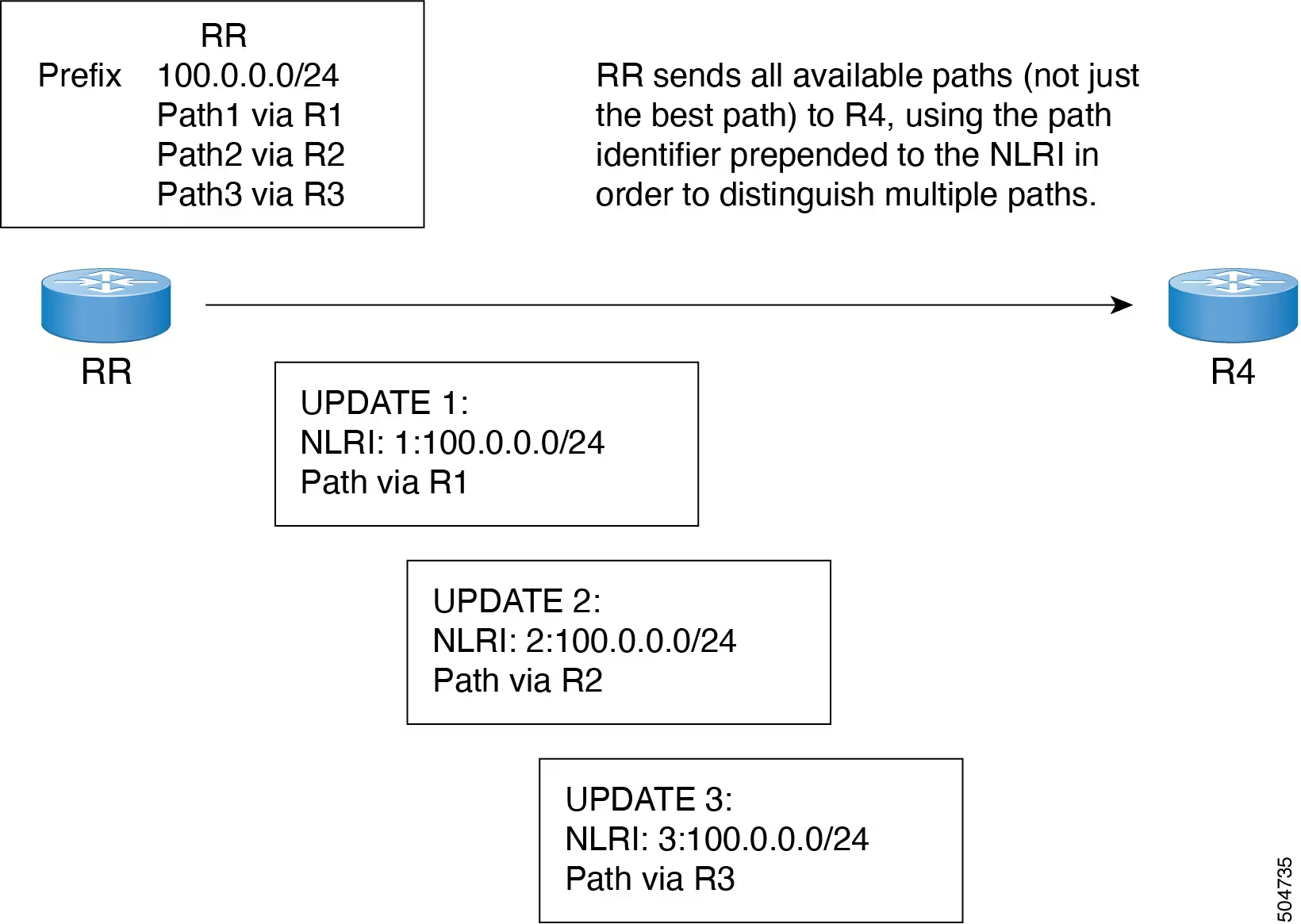

BGP additional paths

Beginning with the Cisco Application Policy Infrastructure Controller (APIC) 6.0(2) release, BGP supports the additional-paths feature, which allows a BGP speaker to propagate and receive multiple paths for the same prefix without the new paths replacing any previous paths. With this feature, BGP peers negotiate whether each peer supports advertising multiple paths per prefix, receiving them, or both. A 4-byte path ID is added to the network layer reachability information (NLRI) to differentiate multiple paths for the same prefix sent across a peer session.
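As an illustration of where the 4-byte path ID sits, the following Python sketch encodes a single IPv4 NLRI entry in the layout defined by RFC 7911 (path identifier, then prefix length, then prefix bytes). The function names are hypothetical and chosen for this example only; this is not Cisco APIC code.

```python
import ipaddress
import struct
from typing import Optional, Tuple


def encode_nlri(prefix: str, path_id: Optional[int] = None) -> bytes:
    """Encode one IPv4 NLRI entry, optionally preceded by a 4-byte path ID."""
    net = ipaddress.ip_network(prefix)
    # Only as many prefix bytes as the prefix length requires are sent.
    n_bytes = (net.prefixlen + 7) // 8
    body = bytes([net.prefixlen]) + net.network_address.packed[:n_bytes]
    if path_id is None:
        return body
    # With additional-paths, the path ID comes first, in network byte order.
    return struct.pack("!I", path_id) + body


def decode_nlri_with_path_id(data: bytes) -> Tuple[int, str]:
    """Decode one IPv4 NLRI entry that carries a path ID."""
    (path_id,) = struct.unpack("!I", data[:4])
    prefixlen = data[4]
    n_bytes = (prefixlen + 7) // 8
    # Pad the truncated prefix back to 4 bytes before formatting it.
    addr = data[5:5 + n_bytes] + bytes(4 - n_bytes)
    return path_id, f"{ipaddress.IPv4Address(addr)}/{prefixlen}"
```

Two advertisements for 10.1.1.0/24 with path IDs 1 and 2 produce different byte strings, which is what lets the receiver keep both.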

The following limitations apply:

- Cisco APIC supports only the receive functionality.

- If you configure the BGP additional-paths receive feature after the session is established, the configuration takes effect on the next session flap.

Prior to the additional-paths receive feature, BGP advertised only one best path, and the BGP speaker accepted only one path for a given prefix from a given peer. If a BGP speaker received multiple paths for the same prefix within the same session, BGP used the most recent advertisement.
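The difference between the two behaviors can be sketched as a per-session table of received paths. This is a simplified model with hypothetical names (`AdjRibIn`, `receive`, `next_hops`), not the actual BGP implementation: without additional-paths receive, the table is keyed by prefix alone, so a later advertisement overwrites the earlier one; with it, the key also includes the path ID.

```python
from typing import Dict, List, Optional, Tuple


class AdjRibIn:
    """Paths received from one peer session (simplified, hypothetical model)."""

    def __init__(self, add_paths_receive: bool) -> None:
        self.add_paths_receive = add_paths_receive
        self._paths: Dict[Tuple[str, Optional[int]], str] = {}

    def receive(self, prefix: str, next_hop: str,
                path_id: Optional[int] = None) -> None:
        # Without additional-paths the path ID is ignored, so the most
        # recent advertisement for a prefix replaces the previous one.
        key = (prefix, path_id if self.add_paths_receive else None)
        self._paths[key] = next_hop

    def next_hops(self, prefix: str) -> List[str]:
        return sorted(nh for (p, _), nh in self._paths.items() if p == prefix)


legacy = AdjRibIn(add_paths_receive=False)
legacy.receive("10.1.1.0/24", "192.168.1.1", path_id=1)
legacy.receive("10.1.1.0/24", "192.168.1.2", path_id=2)

add_paths = AdjRibIn(add_paths_receive=True)
add_paths.receive("10.1.1.0/24", "192.168.1.1", path_id=1)
add_paths.receive("10.1.1.0/24", "192.168.1.2", path_id=2)
```

In this model, `legacy` ends up with only the second next-hop, while `add_paths` retains both.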

Proportional ECMP

In Cisco Application Centric Infrastructure (ACI), all next-hops connected to a border leaf switch are considered as one equal-cost multi-path (ECMP) routing path when forwarded in hardware. Cisco ACI does not redistribute ECMP paths into BGP for directly connected next-hops, but does for recursive next-hops.

In the following example, border leaf switches 1 and 2 advertise the 10.1.1.0/24 route using the next-hop propagate and redistribute attached host features:

10.1.1.0/24

through 192.168.1.1 (border leaf switch 1) -> ECMP path 1

through 192.168.1.2 (border leaf switch 1) -> ECMP path 1

through 192.168.1.3 (border leaf switch 1) -> ECMP path 1

through 192.168.1.4 (border leaf switch 2) -> ECMP path 2

- ECMP path 1: 50% (next-hops 192.168.1.1, 192.168.1.2, 192.168.1.3)

- ECMP path 2: 50% (next-hop 192.168.1.4)

The percentage of traffic hashing on each next-hop is an approximation. The actual percentage varies.

This route entry on a non-border leaf switch results in two ECMP paths from the non-border leaf to each border leaf switch. This can result in disproportionate load balancing to the border leaf switches if the next-hops are not evenly distributed across the border leaf switches that advertise the routes.

Beginning with Cisco ACI release 6.0(2), you can use the next-hop propagate and redistribute attached host features to avoid sub-optimal routing in the Cisco ACI fabric. When these features are enabled, packet flows from a non-border leaf switch are forwarded directly to the leaf switch connected to the next-hop address. All next-hops are now used for ECMP forwarding from the hardware. In addition, Cisco ACI now redistributes ECMP paths into BGP for both directly connected next-hops and recursive next-hops.

In the following example, border leaf switches 1 and 2 advertise the 10.1.1.0/24 route with the next-hop propagate and redistribute attached host features:

10.1.1.0/24

through 192.168.1.1 (border leaf switch 1) -> ECMP path 1

through 192.168.1.2 (border leaf switch 1) -> ECMP path 2

through 192.168.1.3 (border leaf switch 1) -> ECMP path 3

through 192.168.1.4 (border leaf switch 2) -> ECMP path 4

- ECMP path 1: 25% (next-hop 192.168.1.1)

- ECMP path 2: 25% (next-hop 192.168.1.2)

- ECMP path 3: 25% (next-hop 192.168.1.3)

- ECMP path 4: 25% (next-hop 192.168.1.4)
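The arithmetic behind the two examples can be checked with a short Python sketch. It uses the four next-hops from the examples and assumes an ideal, perfectly even hash; as noted earlier, actual percentages are approximate. The function names are hypothetical, and the even split among next-hops behind one border leaf switch in the earlier behavior is an assumption made for illustration.

```python
from collections import defaultdict
from typing import Dict

# Next-hop address -> border leaf switch it is connected to (from the examples).
NEXT_HOPS: Dict[str, str] = {
    "192.168.1.1": "border leaf switch 1",
    "192.168.1.2": "border leaf switch 1",
    "192.168.1.3": "border leaf switch 1",
    "192.168.1.4": "border leaf switch 2",
}


def share_one_path_per_switch(next_hops: Dict[str, str]) -> Dict[str, float]:
    """Earlier behavior: each border leaf switch counts as one ECMP path."""
    per_switch_count: Dict[str, int] = defaultdict(int)
    for switch in next_hops.values():
        per_switch_count[switch] += 1
    per_switch_share = 1.0 / len(per_switch_count)
    # Assumption: a switch spreads its share evenly over its own next-hops.
    return {nh: per_switch_share / per_switch_count[sw]
            for nh, sw in next_hops.items()}


def share_one_path_per_next_hop(next_hops: Dict[str, str]) -> Dict[str, float]:
    """6.0(2) behavior: every next-hop is its own ECMP path."""
    return {nh: 1.0 / len(next_hops) for nh in next_hops}


def share_by_switch(next_hops: Dict[str, str],
                    shares: Dict[str, float]) -> Dict[str, float]:
    """Sum the per-next-hop shares for each border leaf switch."""
    totals: Dict[str, float] = defaultdict(float)
    for nh, share in shares.items():
        totals[next_hops[nh]] += share
    return dict(totals)


before = share_by_switch(NEXT_HOPS, share_one_path_per_switch(NEXT_HOPS))
after = share_by_switch(NEXT_HOPS, share_one_path_per_next_hop(NEXT_HOPS))
```

Under these assumptions, `before` gives each border leaf switch about 50% of the traffic even though border leaf switch 1 has three next-hops, while `after` gives border leaf switch 1 about 75% and border leaf switch 2 about 25%, proportional to the number of next-hops.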

Support for config stripe winner policies

When a VRF has multiple PIM-enabled border leaf switches, the default behavior is to select one border leaf switch as the stripe winner for each multicast group (PIM-SM) or each group and source (PIM-SSM). The border leaf switch selected as the stripe winner acts as the last hop router (LHR) for the group and sends PIM join/prune messages on the externally connected links toward external sources. For more information, see "Multiple Border Leaf Switches as Designated Forwarder." The stripe winner can be any border leaf switch in any pod across the fabric. This default behavior may add latency for multicast streams in the following scenarios:

- All or most receivers for a known multicast group or group range are connected in one pod. If the stripe winner for the group is elected in a different pod, the multicast stream from external sources is forwarded across the inter-pod network (IPN), which adds latency.

- External multicast sources are in the same physical location as one of the pods. If the stripe winner for the multicast group is selected in a different pod, latency may also increase, because the flows must traverse the external network to reach the border leaf switch in the remote pod and then traverse the IPN to reach receivers in the pod closest to the source.

Beginning with ACI release 6.0(2), the fabric supports a configurable stripe winner policy that lets you select a pod for a specific multicast group or group range, a source or source range, or both. The border leaf switch elected as the stripe winner then comes from the selected pod, which addresses the scenarios described above.

This feature also supports the option to exclude remote leaf switches. When this option is enabled, remote leaf switches with PIM-enabled L3Outs are excluded from the stripe winner election.
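The candidate selection can be sketched in Python as follows. This is a minimal illustration, not the fabric's algorithm: the class and function names are hypothetical, and the hash used to pick a winner among the candidates is a stand-in, because the actual election hash is not described here. The sketch also assumes that when no border leaf switch in the configured pod qualifies, the election falls back to all border leaf switches in all pods.

```python
import hashlib
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class BorderLeaf:
    name: str
    pod: int
    remote_leaf: bool = False


def _stand_in_pick(candidates: List[BorderLeaf], group: str,
                   source: Optional[str]) -> BorderLeaf:
    # Stand-in only: NOT the real stripe winner hash. It just gives a
    # deterministic choice for a given (source, group) and candidate set.
    key = f"{source or '*'},{group}".encode()
    index = int.from_bytes(hashlib.sha256(key).digest()[:4], "big")
    ordered = sorted(candidates, key=lambda bl: bl.name)
    return ordered[index % len(ordered)]


def elect_stripe_winner(border_leafs: List[BorderLeaf], group: str,
                        source: Optional[str] = None,
                        configured_pod: Optional[int] = None,
                        exclude_remote_leaf: bool = False) -> BorderLeaf:
    candidates = list(border_leafs)
    if configured_pod is not None:
        in_pod = [bl for bl in border_leafs
                  if bl.pod == configured_pod
                  and not (exclude_remote_leaf and bl.remote_leaf)]
        # Fall back to the default candidate set if the pod has none.
        if in_pod:
            candidates = in_pod
    return _stand_in_pick(candidates, group, source)


fabric = [
    BorderLeaf("bl-101", pod=1),
    BorderLeaf("bl-201", pod=2),
    BorderLeaf("rl-301", pod=2, remote_leaf=True),
]
winner = elect_stripe_winner(fabric, "224.1.1.1", configured_pod=2,
                             exclude_remote_leaf=True)
```

With pod 2 configured and remote leaf switches excluded, the only candidate in this example fabric is `bl-201`, so it is elected regardless of the hash.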

Config Based Stripe Winner Election Guidelines and Requirements:

- Only the border leaf switches (BLs) in the configured pod are considered for the stripe winner election. Without this configuration, all BLs from all pods are considered.

- Among the BLs in the pod, only one BL is elected as the config-based stripe winner.

- If you select the exclude remote leaf (RL) option, the RLs are excluded from the config stripe winner election.

- All BLs in the pod are considered candidates, and the regular stripe winner election logic is used to elect one BL in the pod as the stripe winner.

- If there are no BLs in the configured pod, or if none of the BLs are candidates for the config stripe winner election, the election falls back to the default stripe winner election logic, which considers all BLs in all pods as candidates.

- When you perform a VRF delete and re-add operation, do not add the config stripe winner configuration back with the VRF configuration.

- You must add the VRF configuration first, wait four minutes, and then add the config stripe winner configuration.

- The config stripe winner may result in a scenario where the configured (S,G) stripe winner is a different border leaf switch than the (*,G) stripe winner. In this case, the BL that is the (*,G) stripe winner also installs an (S,G) mroute. Both the configured (S,G) stripe winner and the (*,G) stripe winner receive multicast traffic from the external source, but only the configured (S,G) stripe winner forwards multicast traffic into the fabric.

- Overlapping address ranges are not supported. For example, if 224.1.0.0/16 is already configured, you cannot configure 224.1.0.0/24. However, you can have any number of configurations with different source ranges for 224.1.0.0/16.

- Config stripe winner policy is not supported for IPv6 multicast.

- The maximum number of ranges that can be configured is 500 per VRF.

- Config stripe winner policy is not supported with Inter-VRF Multicast in ACI release 6.0(2).
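Several of these guidelines (no overlapping group ranges, multiple source ranges allowed for the same group range, IPv4 only, and at most 500 ranges per VRF) can be expressed as a validation sketch using Python's standard `ipaddress` module. The function name and the entry format are hypothetical, and the example prefixes are written as full four-octet networks. Treating an identical group range and source range pair as a duplicate is an assumption of this sketch; how the fabric handles overlapping source ranges is not covered here.

```python
import ipaddress
from typing import List, Tuple

MAX_RANGES_PER_VRF = 500

# One configured entry: (multicast group range, source range).
Entry = Tuple[str, str]


def can_add_range(existing: List[Entry], group: str, source: str) -> bool:
    """Return True if the new entry respects the guidelines modeled here."""
    new_group = ipaddress.ip_network(group)
    new_source = ipaddress.ip_network(source)
    if new_group.version != 4:
        return False  # IPv6 multicast is not supported.
    if len(existing) >= MAX_RANGES_PER_VRF:
        return False  # Per-VRF limit reached.
    for old_group_text, old_source_text in existing:
        old_group = ipaddress.ip_network(old_group_text)
        if old_group == new_group:
            # Same group range: allowed only with a different source range
            # (assumption: an identical pair is rejected as a duplicate).
            if ipaddress.ip_network(old_source_text) == new_source:
                return False
            continue
        if old_group.overlaps(new_group):
            return False  # Overlapping group ranges are not supported.
    return True


configured = [("224.1.0.0/16", "10.0.0.0/24")]
```

For example, with `configured` as shown, adding 224.1.0.0/24 is rejected because it overlaps 224.1.0.0/16, while adding 224.1.0.0/16 again with source range 10.0.1.0/24 is accepted.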

First hop security (FHS) support for VMM

First-Hop Security (FHS) features enable better IPv4 and IPv6 link security and management over Layer 2 links. In a service provider environment, these features closely control address assignment and derived operations, such as Duplicate Address Detection (DAD) and Address Resolution (AR).

The following supported FHS features secure the protocols and help build a secure endpoint database on the fabric leaf switches, which is used to mitigate security threats such as man-in-the-middle (MIM) attacks and IP address theft:

- ARP Inspection—allows a network administrator to intercept, log, and discard ARP packets with invalid MAC address to IP address bindings.

- ND Inspection—learns and secures bindings for stateless autoconfiguration addresses in Layer 2 neighbor tables.

- DHCP Inspection—validates DHCP messages received from untrusted sources and filters out invalid messages.

- RA Guard—allows the network administrator to block or reject unwanted or rogue router advertisement (RA) messages.

- IPv4 and IPv6 Source Guard—blocks any data traffic from an unknown source.

- Trust Control—a trusted source is a device that is under your administrative control. These devices include the switches, routers, and servers in the Fabric. Any device beyond the firewall or outside the network is an untrusted source. Generally, host ports are treated as untrusted sources.
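As a rough illustration of how a binding check such as ARP Inspection works, the following Python sketch validates the sender IP address and MAC address of an ARP packet against a table of known bindings. The function name, the table format, and the rule that packets from trusted ports skip the check are assumptions made for this example; they do not describe the leaf switch implementation.

```python
from typing import Dict


def arp_permitted(bindings: Dict[str, str], sender_ip: str,
                  sender_mac: str, trusted_port: bool = False) -> bool:
    """Return True if an ARP packet should be forwarded.

    bindings maps an IP address to the MAC address that is allowed to
    claim it (hypothetical stand-in for the secure endpoint database).
    """
    if trusted_port:
        # Assumption: packets from trusted sources are not validated.
        return True
    expected_mac = bindings.get(sender_ip)
    if expected_mac is None:
        return False  # Unknown IP address: no valid binding exists.
    return expected_mac.lower() == sender_mac.lower()


known_bindings = {"192.0.2.10": "00:50:56:aa:bb:01"}
```

A host that sends an ARP packet claiming 192.0.2.10 with a different MAC address fails the check and the packet would be discarded, which is how address theft and ARP poisoning are mitigated.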

FHS features provide the following security measures:

- Role Enforcement—Prevents untrusted hosts from sending messages that are out of the scope of their role.

- Binding Enforcement—Prevents address theft.

- DoS Attack Mitigations—Prevents malicious endpoints from growing the endpoint database to the point where the database could stop providing operation services.

- Proxy Services—Provides some proxy-services to increase the efficiency of address resolution.

FHS features are enabled on a per-tenant bridge domain (BD) basis. Because a bridge domain may be deployed on a single leaf switch or across multiple leaf switches, the FHS threat control and mitigation mechanisms cater to both single-switch and multiple-switch scenarios.

Beginning with Cisco APIC release 6.0(2), FHS is supported on the VMware DVS VMM domain. If you need to implement FHS within an EPG, enable intra-EPG isolation. If intra-EPG isolation is not enabled, endpoints within the same VMware ESX port-group can bypass FHS. Even without intra-EPG isolation, FHS features still take effect for endpoints that are in different port-groups. For instance, FHS can prevent a compromised VM from poisoning the ARP table of another VM in a different port-group.

TACACS external logging for switches

Terminal Access Controller Access Control System (TACACS) and Terminal Access Controller Access Control System Plus (TACACS+) are simple security protocols that provide centralized validation of users attempting to gain access to network devices. TACACS+ furthers this capability by separating the authentication, authorization, and accounting functions into modules and by encrypting all traffic between the network access server (NAS) and the TACACS+ daemon.

TACACS external logging collects AAA data from a configured fabric-wide TACACS source and delivers it to one or more remote destination TACACS servers, as configured in a TACACS destination group. The collected data includes AAA session logs (SessionLR) such as log-ins, log-outs, and time ranges, for every Cisco Application Policy Infrastructure Controller (APIC) user, as well as AAA modifications (ModLR) such as the addition of a new user or a password change. Additionally, all configuration changes are logged and include the user ID and time stamp.

Beginning with the Cisco APIC 6.0(2) release, you can enable TACACS external logging for switches. When enabled, the Cisco APIC collects the same types of AAA data from the switches in the chosen TACACS monitoring destination group.

Scale enhancements

- 10,000 VRF instances per fabric

- Mis-Cabling Protocol (MCP): 2,000 VLANs per interface and 12,000 logical ports (port x VLAN) per leaf switch

- 200 IP SLA probes per leaf switch

- 24 leaf switches (12 pairs) in the same L3Out

- 2,000 sub-interfaces (BGP, OSPF, and static)

- 2,000 bidirectional forwarding detection (BFD) sessions

- Longest Prefix Matches (LPM): 440,000 IPv4 and 100,000 IPv6 routes
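The two MCP limits interact: because logical ports are counted as port x VLAN, the per-leaf limit can be reached well before every interface carries the per-interface maximum. The following Python sketch checks a planned VLAN layout against both limits; the function name and the interface names are hypothetical.

```python
from typing import Dict

MAX_MCP_VLANS_PER_INTERFACE = 2000
MAX_MCP_LOGICAL_PORTS_PER_LEAF = 12000


def mcp_within_scale(vlans_per_interface: Dict[str, int]) -> bool:
    """Check a leaf switch's planned MCP VLAN counts against both limits."""
    if any(count > MAX_MCP_VLANS_PER_INTERFACE
           for count in vlans_per_interface.values()):
        return False
    # Logical ports = sum over interfaces of the VLANs on each interface.
    logical_ports = sum(vlans_per_interface.values())
    return logical_ports <= MAX_MCP_LOGICAL_PORTS_PER_LEAF


six_full_ports = {f"eth1/{i}": 2000 for i in range(1, 7)}
seven_full_ports = {f"eth1/{i}": 2000 for i in range(1, 8)}
```

Six interfaces with 2,000 VLANs each reach exactly 12,000 logical ports and are within scale, while a seventh such interface exceeds the per-leaf limit.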

Auto firmware update for Cisco APIC on discovery

Beginning with the Cisco APIC 6.0(2) release, when you add a new Cisco APIC to the fabric through Product Returns & Replacements (RMA), cluster expansion, or commission, the Cisco APIC is automatically upgraded to the same version as the existing cluster. Because the new Cisco APIC goes through the upgrade process, it may take additional time to join the cluster. If the auto upgrade fails, a fault is raised to alert you.

Prerequisites and conditions for the automatic Cisco APIC firmware update on discovery feature:

- All the commissioned Cisco APICs in the cluster must be running the same version, which must be 6.0(2) or later.

- The Cisco APIC image of the same version as the commissioned APICs in the cluster should be available in the firmware repository of the commissioned APICs in the cluster.

- The CIMC IP address of the new APIC must be configured and reachable from the commissioned APICs in the cluster.

- If the new APIC is running a version older than 6.0(2), you must complete the Initial Setup Utility from the APIC console on the new APIC to set the fabric name, APIC ID, and so on. If the new APIC is already running 6.0(2) or later, you do not need to run the Initial Setup Utility.

- Auto Firmware Update on APIC discovery is supported only on Cisco APIC version 4.2(1) and later.
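The first four prerequisites can be summarized as a checklist sketch in Python. The function names and parameters are hypothetical and only model the conditions listed above; the version parser assumes the `major.minor(maintenance+patch)` format used in these notes, such as 6.0(2h).

```python
import re
from typing import List, Tuple

MIN_RELEASE = (6, 0, 2)


def parse_version(version: str) -> Tuple[int, int, int, str]:
    """Parse a version such as '6.0(2h)' into (6, 0, 2, 'h')."""
    match = re.fullmatch(r"(\d+)\.(\d+)\((\d+)([a-z]*)\)", version)
    if match is None:
        raise ValueError(f"unrecognized version: {version!r}")
    major, minor, maintenance, patch = match.groups()
    return int(major), int(minor), int(maintenance), patch


def auto_update_blockers(cluster_versions: List[str],
                         repository_versions: List[str],
                         cimc_reachable: bool,
                         new_apic_version: str,
                         initial_setup_done: bool) -> List[str]:
    """Return the reasons (if any) the modeled prerequisites are not met."""
    blockers: List[str] = []
    if len(set(cluster_versions)) != 1:
        blockers.append("commissioned APICs do not all run the same version")
    else:
        cluster_version = cluster_versions[0]
        if parse_version(cluster_version)[:3] < MIN_RELEASE:
            blockers.append("cluster version is older than 6.0(2)")
        if cluster_version not in repository_versions:
            blockers.append("cluster image is not in the firmware repository")
    if not cimc_reachable:
        blockers.append("CIMC IP address of the new APIC is not reachable")
    if parse_version(new_apic_version)[:3] < MIN_RELEASE and not initial_setup_done:
        blockers.append("Initial Setup Utility not completed on the new APIC")
    return blockers
```

An empty list means that none of the modeled conditions block the automatic update; it does not replace the checks that the Cisco APIC itself performs.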

Installing switch software maintenance upgrade patches without reloading

Some switch software maintenance upgrade (SMU) patches do not require you to reload the switch after you install those patches.

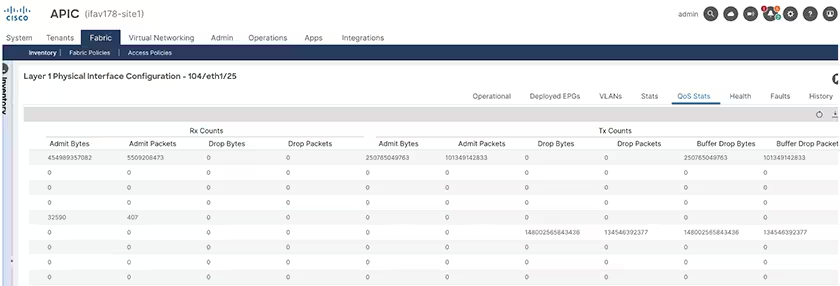

Troubleshooting Cisco APIC QoS Policies

You can view the QoS statistics by using the Cisco APIC GUI.

New hardware features

Changes in behavior

To upgrade to this release, you must perform the following procedure:

- Download the 6.0(2) Cisco APIC image and upgrade the APIC cluster to the 6.0(2) release. Before this step is completed, DO NOT download the Cisco ACI-mode switch images to the APIC.

- Download both the 32-bit and 64-bit switch images to the Cisco APIC. Downloading only one of the images may result in errors during the upgrade process.

- Create the maintenance groups and trigger the upgrade procedure as usual. Cisco APIC automatically deploys the correct image to the respective switch during the upgrade process.

On the "Interface Configuration" GUI page (Fabric > Access Policies > Interface Configuration), the node table now contains the following columns:

- Interface Description: The user-entered description of the interface. You can edit the description by clicking … and choosing Edit Interface Configuration.

- Port Direction: The direction of the port. Possible values are "uplink," "downlink," and "default." The default value is "default," which indicates that the port uses its default direction. The other values display if you converted the port from uplink to downlink or downlink to uplink.

- There is now a "Switch Configuration" GUI page (Fabric > Access Policies > Switch Configuration) that shows information about the leaf and spine switches controlled by the Cisco APIC. This page also enables you to modify a switch's configuration to create an access policy group and fabric policy group, or to remove the policy groups from one or more nodes. This page is similar to the "Interface Configuration" GUI page that existed previously, but is for switches.

- You can no longer use telnet to connect to the management IP address of a Cisco APIC or Cisco ACI-mode switch.

- The "Images" GUI page (Admin > Firmware > Images) now includes a "Platform Type" column, which specifies whether a switch image is 64-bit or 32-bit. This column does not apply to Cisco APIC images.

- The initial cluster setup and bootstrapping procedure has been simplified with the introduction of the APIC Cluster Bringup GUI. The APIC Cluster Bringup GUI supports virtual and physical APIC platforms.

- When you configure a custom certificate for Cisco ACI HTTPS access, you can now choose the elliptic-curve cryptography (ECC) key type. Prior to this release, RSA was the only key type.

Resolved issues

CSCvz72941

During ID recovery, id-import times out, which causes ID recovery to fail.

CSCwc66053

Preconfiguration validations for L3Outs that occur whenever a new configuration is pushed to the Cisco APIC might not get triggered.

Compatibility information

This release supports the following Cisco APIC servers:

- APIC-L2 - Cisco APIC with large CPU, hard drive, and memory configurations (more than 1000 edge ports)

- APIC-L3 - Cisco APIC with large CPU, hard drive, and memory configurations (more than 1200 edge ports)

- APIC-L4 - Cisco APIC with large CPU, hard drive, and memory configurations (more than 1200 edge ports)

- APIC-M2 - Cisco APIC with medium-size CPU, hard drive, and memory configurations (up to 1000 edge ports)

- APIC-M3 - Cisco APIC with medium-size CPU, hard drive, and memory configurations (up to 1200 edge ports)

- APIC-M4 - Cisco APIC with medium-size CPU, hard drive, and memory configurations (up to 1200 edge ports)

Cisco NX-OS

16.0(2)

Cisco UCS Manager

2.2(1c) or later is required for the Cisco UCS Fabric Interconnect and other components, including the BIOS, CIMC, and the adapter.

CIMC HUU ISO

- 4.2(2a) CIMC HUU ISO (recommended) for UCS C220/C240 M5 (APIC-L3/M3)

- 4.1(3f) CIMC HUU ISO for UCS C220/C240 M5 (APIC-L3/M3)

- 4.1(3d) CIMC HUU ISO for UCS C220/C240 M5 (APIC-L3/M3)

- 4.1(2k) CIMC HUU ISO (recommended) for UCS C220/C240 M4 (APIC-L2/M2)

- 4.1(2g) CIMC HUU ISO for UCS C220/C240 M4 (APIC-L2/M2)

- 4.1(2b) CIMC HUU ISO for UCS C220/C240 M4 (APIC-L2/M2)

- 4.1(1g) CIMC HUU ISO for UCS C220/C240 M4 (APIC-L2/M2) and M5 (APIC-L3/M3)

- 4.1(1f) CIMC HUU ISO for UCS C220 M4 (APIC-L2/M2) (deferred release)

- 4.1(1d) CIMC HUU ISO for UCS C220 M5 (APIC-L3/M3)

- 4.1(1c) CIMC HUU ISO for UCS C220 M4 (APIC-L2/M2)

- 4.0(4e) CIMC HUU ISO for UCS C220 M5 (APIC-L3/M3)

- 4.0(2g) CIMC HUU ISO for UCS C220/C240 M4 and M5 (APIC-L2/M2 and APIC-L3/M3)

- 4.0(1a) CIMC HUU ISO for UCS C220 M5 (APIC-L3/M3)

- 3.0(4d) CIMC HUU ISO for UCS C220/C240 M3 and M4 (APIC-L2/M2)

- 3.0(3f) CIMC HUU ISO for UCS C220/C240 M4 (APIC-L2/M2)

- 2.0(13i) CIMC HUU ISO

- 2.0(9c) CIMC HUU ISO

- 2.0(3i) CIMC HUU ISO

No longer supported hardware

| Product type | Part number |
| --- | --- |
| Spine switch | N9K-C9336PQ |
| Modular spine switch line card | N9K-X9736PQ |
| Modular spine switch fabric module | N9K-C9504-FM, N9K-C9508-FM, N9K-C9516-FM |
| Leaf switch | N9K-C93120TX, N9K-C93128TX, N9K-C9332PQ, N9K-C9372PX, N9K-C9372PX-E, N9K-C9372TX, N9K-C9372TX-E, N9K-C9396PX, N9K-C9396TX |
